Data Management and Processing in Microsoft Fabric

Uncover Microsoft Fabric's power! Secure OneLake data, optimize with Apache Spark, and master lakehouse vs. warehouse structures. Elevate your data game today!


An outline of this training course

This course is designed to enhance your data management skills within the Microsoft Fabric ecosystem. Learn to upload and manage data securely, use shortcuts to make your workflows more efficient, and distinguish between lakehouse and warehouse architectures. Gain hands-on experience with Apache Spark for data engineering, using notebooks in both the web and VS Code environments, and learn techniques for monitoring Spark jobs. This curriculum equips you with the essential skills for effective data analysis and management!

What is needed to take this course

Participants should understand the basics of cloud computing and data management, have programming skills in a language such as Python or SQL, and have access to Microsoft Fabric. Familiarity with Apache Spark and Visual Studio Code, a reliable computer with an internet connection, and solid analytical skills will also enhance your learning experience.

Who is this course for

This course is designed for data engineers, data scientists, IT professionals, developers interested in data analytics, and educators or students in data-focused fields who want to deepen their expertise in cloud-based data management and analysis with Microsoft Fabric.

Details of what you will learn in this course

During this Microsoft Fabric course, participants will learn:

- Navigating OneLake: Understand how to upload, manage, and organize data within OneLake, Microsoft Fabric's data lake solution, including using OneLake Explorer for file management.

- Data Security: Master the concepts of authentication and authorization to secure data within the Microsoft Fabric environment, so that sensitive information is protected and access is controlled.

- Workflow Optimization: Discover how to use OneLake shortcuts, which reference data stored in other locations without copying it, to streamline data workflows and make data processing tasks more efficient.

- Lakehouse vs. Warehouse Architecture: Learn the differences between lakehouse and warehouse architectures and how each can be used for different data storage and analysis needs.

- Apache Spark Fundamentals: Dive into the essentials of Apache Spark for data engineering within Microsoft Fabric and understand its role in processing large datasets for analytics.

- Notebook Usage: Get hands-on experience with notebooks in both web-based and Visual Studio Code environments, learning how to execute code, analyze data, and visualize results interactively.

- Monitoring Spark Jobs: Learn techniques for monitoring and optimizing Spark jobs for efficient data processing, including using the monitoring hub and the Spark UI for performance insights.

What you get with the course

This course will provide participants with detailed Microsoft Fabric materials, hands-on exercises, expert insights on Spark jobs, and interactive notebook sessions. Participants will earn a completion certificate and join a community for support and networking, equipping them with practical skills and knowledge in data management and analysis.

Program Level

Intermediate

Field(s) of Study

Data Engineering, Cloud Computing, Data Analytics

Instruction Delivery Method

QAS Self-study

Credits To

- Henry Habib

- The Intelligent Worker

- Sawyer Nyquist

- Hitesh Govind

***This course was published in March 2024***

Enterprise DNA is registered with the National Association of State Boards of Accountancy (NASBA) as a sponsor of continuing professional education on the National Registry of CPE Sponsors. State boards of accountancy have final authority on the acceptance of individual courses for CPE credit. Complaints regarding registered sponsors may be submitted to the National Registry of CPE Sponsors through its website: www.nasbaregistry.org


Curriculum

Course Overview

Navigating and Utilizing OneLake

Enhancing Workflows

Advanced Data Processing Techniques

Utilizing Spark and Notebooks

Data Warehousing Concepts

Conclusion and Next Steps

Your Instructor

EDNA Experts



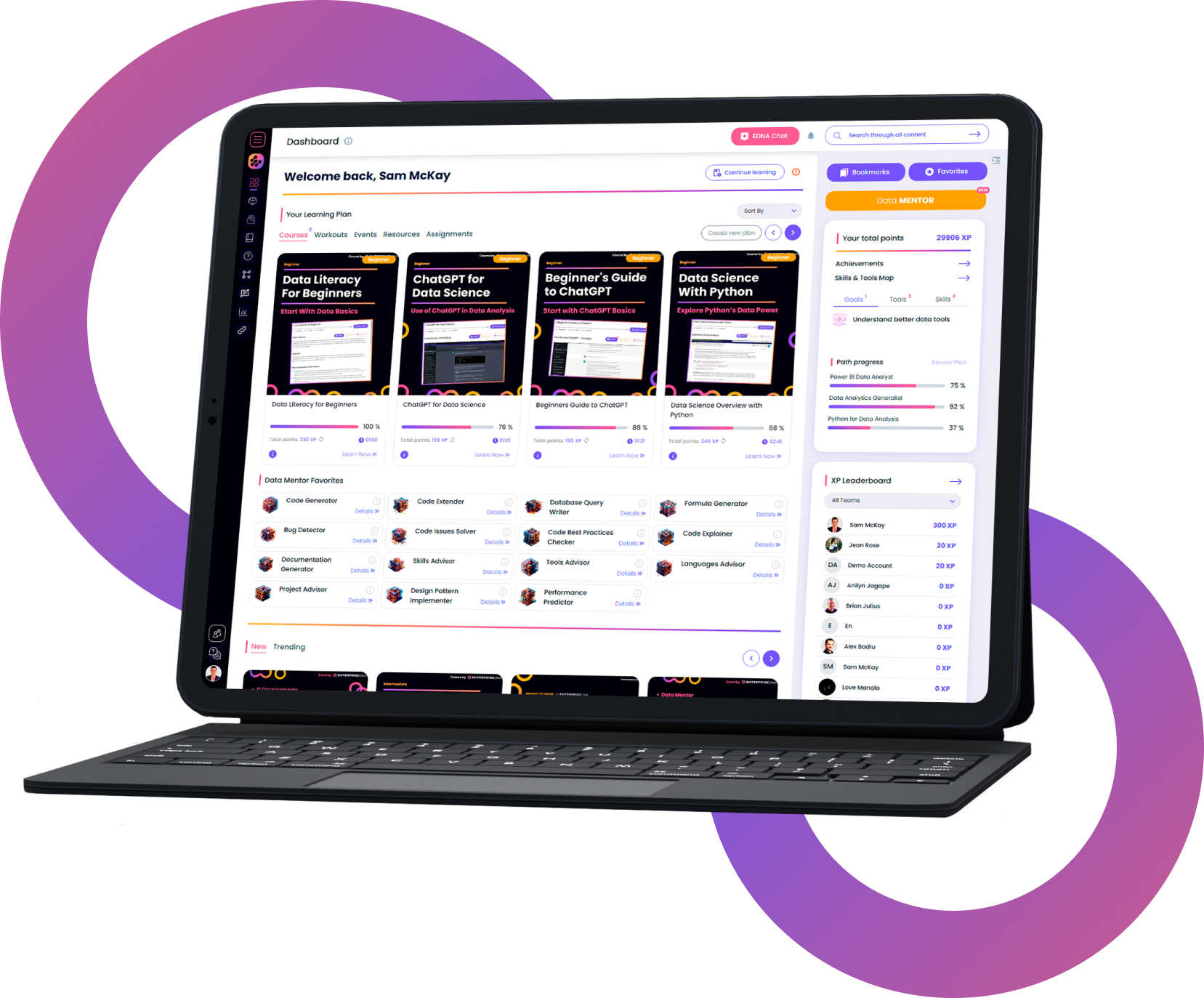

Get full access to unparalleled

training & skill-building resources

FOR INDIVIDUALS

Enterprise DNA

For Individuals

Empowering the most valuable data analysts to expand their analytical thinking and insight generation possibilities.

Learn MoreFOR BUSINESS

Enterprise DNA

For Business

Training, tools, and guidance to unify and upskill the data analysts in your workplace.

Learn More